By Jim Tate, EMR Advocate

Host of The Tate Chronicles – #TateDispatches

I recently wrote a post (The Need for Transparency in Healthcare – Part 1) that detailed the “criminal acts” (not my words; they come from the Department of Justice) involving a major EHR developer and a well-known Big Pharma company. Those acts included bribes that allowed the Pharma company to corrupt the functionality of Clinical Decision Support (CDS) in healthcare technology. That is about as bad as it can get in terms of “cooking the code” for money at the expense of patient care. Now, with the entrance of AI into clinical decision support functionality, the door to these types of events may open even wider.

It is time to talk about the role of AI in clinical decision support. There is a world of difference between evidence-based CDS and predictive-based CDS. Here are some key differences:

- Basis of Recommendations: Evidence-based systems rely on established research and guidelines, while predictive-based systems use data-driven predictions about future events or outcomes.

- Flexibility: Predictive-based CDS can adapt more dynamically to individual patient data, offering personalized insights, whereas evidence-based systems provide generalized recommendations based on broader clinical evidence.

- Purpose and Use: Evidence-based CDS aims to standardize care and ensure it aligns with the best available evidence, while predictive-based CDS aims to personalize care by forecasting individual patient risks and outcomes.

Evidence-based CDS leverages the vast body of clinical research and guidelines to provide recommendations. These systems use data from systematic research and clinical guidelines to advise on best practices for patient care. Predictive-based CDS uses algorithms and statistical models to analyze large datasets and predict outcomes. These systems can leverage historical and real-time data to forecast future medical events or patient conditions.

ONC’s recent HTI-1 Rule (Health Data, Technology, and Interoperability) anticipated the introduction of AI and predictive-based clinical decision platforms into the healthcare domain. In terms of ONC Certification, the prior §170.315(a)(9) Clinical Decision Support criterion is being morphed into the new §170.315(b)(11) Decision Support Interventions (DSI) criterion, which makes room for possible predictive-based algorithms.

To guard against biases that may be inherent in predictive-based algorithms, ONC has adopted a quality framework known as FAVES.

- “Fair (unbiased, equitable): Model does not exhibit biased performance, prejudice or favoritism toward an individual or group based on their inherent or acquired characteristics. The impact of using the model is similar across same or different populations or groups.”

- “Appropriate: Model is well matched to specific contexts and populations to which it is applied.”

- “Valid: Model has been shown to estimate targeted values accurately and as expected in both internal and external data.”

- “Effective: Model has demonstrated benefit and significant results in real-world conditions.”

- “Safe: Model use has probable benefits that outweigh any probable risk.”

If a health information technology developer intends to certify to the (b)(11) DSI criterion only for evidence-based functionality, there is now a higher bar for the source attributes it must be able to document. So even if the developer is not adding predictive-based functionality, there is still additional work to be done by the December 31, 2024 deadline.

Source attributes required for the current (a)(9) evidence-based CDS are:

- Bibliographic citation of the intervention

- Developer of the intervention

- Funding source of the intervention

- Release and, if applicable, revision date(s) of the intervention

With the move to (b)(11), additional source attributes are required:

- Use of race, ethnicity, language, sexual orientation, gender identity, sex, age

- Use of social determinants of health data

- Use of health status assessment data
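For developers wondering how to track which of these attributes an evidence-based intervention can already document, here is a minimal Python sketch. It is a hypothetical internal checklist, not an ONC data model or certification tool: the class name `EvidenceBasedSourceAttributes`, its field names, and the `missing_attributes` helper are illustrative assumptions, and the fields simply mirror the two lists above rather than the full regulatory text.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class EvidenceBasedSourceAttributes:
    """Hypothetical record of the source attributes listed above.

    Field names are illustrative only; they are not taken from ONC's rule.
    None (or a blank string) means the attribute is not yet documented.
    """

    # Attributes carried over from (a)(9) evidence-based CDS
    bibliographic_citation: Optional[str] = None
    developer: Optional[str] = None
    funding_source: Optional[str] = None
    release_and_revision_dates: Optional[str] = None
    # Additional attributes named above for (b)(11); each holds a short
    # description of whether and how the intervention uses that data
    uses_demographic_data: Optional[str] = None  # race, ethnicity, language, SOGI, sex, age
    uses_sdoh_data: Optional[str] = None
    uses_health_status_assessment_data: Optional[str] = None


def missing_attributes(record: EvidenceBasedSourceAttributes) -> List[str]:
    """Return the names of fields that are still undocumented, in declaration order."""
    missing = []
    for f in fields(record):
        value = getattr(record, f.name)
        if value is None or not value.strip():
            missing.append(f.name)
    return missing


if __name__ == "__main__":
    # A hypothetical alert that meets the old (a)(9) list but not the added (b)(11) items
    alert = EvidenceBasedSourceAttributes(
        bibliographic_citation="(citation for the guideline the alert implements)",
        developer="Example Health IT, Inc. (hypothetical)",
        funding_source="Internal",
        release_and_revision_dates="Released 2024-03-01",
    )
    print(missing_attributes(alert))
    # ['uses_demographic_data', 'uses_sdoh_data', 'uses_health_status_assessment_data']
```

The example shows the point made above: an intervention that was fully documented under (a)(9) can still have gaps once the (b)(11) attributes are added.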

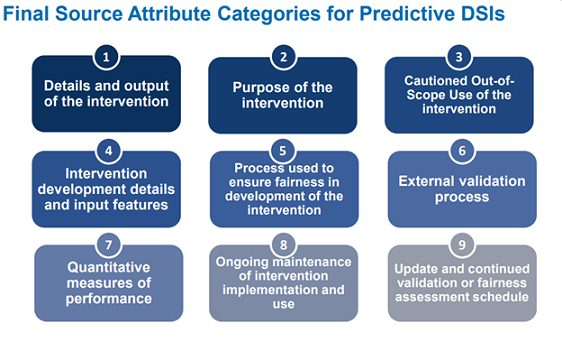

For developers who intend to enter the predictive-based DSI world, there are quite a few more attributes they must be able to document. Courtesy of ONC, here is some high-level information on those required attributes.

We have gone into the weeds in this discussion, and I can assure you that there is still much territory to explore for clarification and best practices in the development and use of AI and machine-based clinical decision rules and alerts.

At the highest level, this is what developers need to know, again from the folks at ONC.

- “Will have one year to update their certified health IT to support capabilities in 170.315(b)(11)”

- “Will need to provide updated technology to their customers by December 31, 2024”

- “Will need to provide summary IRM practice information to their ONC-ACB before December 31, 2024”

- “Will need to keep source attribute information and risk management information up-to-date as an ongoing maintenance of certification requirement”

- “Will need to include as part of Real World Testing Plans and Results”

The door is now wide open for AI to become directly involved in decision support interventions. Concerned organizations are weighing in, such as the World Health Organization, which recently published a document entitled Ethics and governance of artificial intelligence for health: Guidance on large multi-modal models. Let’s not forget the Department of Justice, which has begun investigations to see if anyone is “cooking the AI code” at the expense of patient health.

We are entering an entirely new world when AI becomes directly involved in the treatment decisions providers make. My concern is not the known risks, but the ones we will discover only in the initial stages of this technology’s real-world use. Clinical trials are required before the FDA approves medications. Where are the clinical trials for AI in healthcare? Who is going to train providers in the best use of this powerful tool?