Separating The Hype From The Reality

By Jared Crapo, VP, Health Catalyst

The term big data seems to be everywhere. Bloggers write about it, vendors describe their support for it, and many companies talk about what they have done with it. It seems like everyone has something to say about it, and it certainly is a trendy topic for discussion in the technology world. But what is big data really? And why does it matter for a healthcare provider? Below I present a framework for thinking about big data as it relates to healthcare.

What is Big Data?

In 2001, Doug Laney authored a report about data management for the Meta Group (now part of Gartner). While he didn’t call it big data, he described three characteristics of data that push traditional data management techniques and capabilities to their limits: volume, velocity, and variety. By traditional data management techniques, I mean SQL interactions with a relational database. While there are other proven approaches to managing data, SQL-based relational databases are by far the most widely used.

In addition to high volume, high velocity, and high variety, big data can also mean deeper examination and analysis of the data you already have. Let’s look at each one of these facets of big data, and how they apply in healthcare.

Healthcare Big Data: Volume

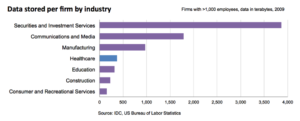

Most people intuitively assume that the large Wall Street securities and investment firms store enormous quantities of data, and that assumption is correct. For a select group of industries, the following chart shows the average quantity of data stored by each firm with more than 1,000 employees.

As you can see, the data stored by a typical healthcare firm is much closer in size to the data stored by a university than it is to that stored by a telecom or investment firm. Keep that in mind the next time you read about how big data tools are a requirement for a cutting-edge financial services company. Each industry has unique challenges, and there are no hard and fast rules for when you need a novel approach to store large quantities of data. Both technology and budget must be considered when designing a system for a particular analytic use case.

The technology components of a system (which include compute, interconnect, and storage infrastructure coupled with the operating system and database management system) have a massive impact on both performance and capacity. Historically, hardware speed and capacity have grown quickly while prices have dropped, a trend expected to continue. Modern operating systems and databases have removed many technical limits such as maximum database size, and the remaining hard boundaries are so large that most organizations will never approach them. Microsoft SQL Server supports a maximum database size of 524,272 terabytes (roughly 524 petabytes). For many years, the maximum table size in Oracle has been limited by the maximum file size in the operating system, which on btrfs, a popular Linux file system, is eight exabytes (eight million terabytes).
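The terabyte figures quoted for these limits depend on whether a storage prefix is read as decimal (each step is 1,000) or binary (each step is 1,024), which is why published conversions sometimes disagree. The following minimal Python sketch shows the arithmetic both ways; the helper names are illustrative only.

```python
# Decimal (SI) prefixes step by 1,000; binary (IEC) prefixes step by 1,024.
DECIMAL_STEP = 1000
BINARY_STEP = 1024


def pb_to_tb(petabytes: int, step: int) -> int:
    """Convert petabytes to terabytes (one prefix step)."""
    return petabytes * step


def eb_to_tb(exabytes: int, step: int) -> int:
    """Convert exabytes to terabytes (two prefix steps)."""
    return exabytes * step * step


print(pb_to_tb(524, DECIMAL_STEP))  # 524000
print(pb_to_tb(524, BINARY_STEP))   # 536576
print(eb_to_tb(8, DECIMAL_STEP))    # 8000000
print(eb_to_tb(8, BINARY_STEP))     # 8388608
```

Either way, the limits are several orders of magnitude beyond what a typical health system stores.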

Methods and strategies for hardware architecture and configuration are well understood, and the limiting factor for performance is typically budgetary, not technological. Licensing costs for database engines like Microsoft SQL Server, IBM DB2, and Oracle can grow quickly by the time you include all the capabilities required to effectively handle large volumes of data. Enterprise class storage solutions can handle large quantities of data, but they also come with a large price tag. However, these tools are well known to hospital IT staff, and well supported by clinical and financial application vendors.

Hadoop, a leading big data engine, is open source and has no licensing costs. It is designed to run on commodity hardware, which reduces the initial capital expense of deploying a system. However, the supporting tools are not as mature, and people with expertise are difficult to find. There are lots of database administrators with seven years of experience; the only people with seven years of Hadoop experience are the team at Yahoo that invented it. Yahoo also operates the largest known Hadoop deployment, with about 42,000 nodes and a total of between 180 and 200 petabytes of storage. Its standard node has between 8 and 12 cores, 48 GB of memory, and 24 to 36 terabytes of disk.

Careful consideration should be given to the capacity, technology, staffing, and cost tradeoffs between traditional database engines and big data tools.

Healthcare Big Data: Velocity

The speed at which some applications generate new data can overwhelm a system’s ability to store that data. Data can be generated from two sources: humans or sensors. Healthcare has both. With a few exceptions like diagnostic imaging and intensive care monitoring, most of the data we use in healthcare is entered by people, which effectively limits the rate at which healthcare organizations can generate data.

Like a hospital’s data, Facebook’s data is generated by people. In September 2013, Harrison Fisk, Manager of Data Performance at Facebook, spoke at Oracle OpenWorld about the company’s use of MySQL, a popular open source database. Nobody considers MySQL a big data tool; it’s a traditional SQL database often thought of as not scaling well. Fisk said Facebook’s MySQL deployment can process a peak rate of 11.2 million row changes (inserts, updates, and deletes) per second. Facebook has 1.1 billion monthly active users to generate that data. A large health system with 3,000 total beds likely has about 15,000 full-time equivalent employees. No matter how much time those employees spend charting, they are unlikely to generate data fast enough to overwhelm a typical SQL database.
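A quick back-of-the-envelope calculation makes the comparison concrete. This Python sketch uses only the figures quoted above and deliberately assumes a best case for the hospital: every employee charting at the same instant, at a constant rate. It is an illustration of scale, not a database benchmark.

```python
# Figures quoted in the text.
FACEBOOK_PEAK_ROW_CHANGES_PER_SEC = 11_200_000
FACEBOOK_MONTHLY_ACTIVE_USERS = 1_100_000_000
HEALTH_SYSTEM_FTES = 15_000

# Row changes per second each employee would need to generate, all at once,
# to match the MySQL peak Facebook reported.
per_employee = FACEBOOK_PEAK_ROW_CHANGES_PER_SEC / HEALTH_SYSTEM_FTES
print(f"{per_employee:.0f} row changes per second per employee")
# → 747 row changes per second per employee

# How many more people Facebook has generating data than the health system.
ratio = FACEBOOK_MONTHLY_ACTIVE_USERS / HEALTH_SYSTEM_FTES
print(f"{ratio:,.0f}x more people")
# → 73,333x more people
```

No clinician enters hundreds of rows per second, which is why human-entered data rarely strains a well-configured SQL database.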

Healthcare Big Data: Variety

There are three different forms of data in most large healthcare institutions. Discretely codified billing and clinical transactions are well suited to relational data models. Digital capture and management of diagnostic imaging studies required the development of specialized data formats, communication protocols, and storage systems. While these picture archiving and communication systems (PACS) are not typically recognized as big data, they clearly meet the criteria we have outlined here.
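The first of these forms, discretely coded transactions, maps naturally onto relational tables. The following Python sketch uses the standard-library `sqlite3` module with a hypothetical `charges` table; the codes `A100` and `B200` and the amounts are placeholders, not real billing codes. Because every field is discrete, a question like "how often was each code billed, and for how much?" becomes a single `GROUP BY` query.

```python
import sqlite3

# Hypothetical, simplified charge table: one row per coded transaction.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE charges (encounter_id INTEGER, code TEXT, amount_cents INTEGER)"
)
conn.executemany(
    "INSERT INTO charges VALUES (?, ?, ?)",
    [
        (1, "A100", 12000),  # placeholder code and amount
        (1, "B200", 4500),
        (2, "A100", 12000),
    ],
)

# Discrete fields make aggregation straightforward.
totals = conn.execute(
    "SELECT code, COUNT(*), SUM(amount_cents) "
    "FROM charges GROUP BY code ORDER BY code"
).fetchall()
print(totals)  # [('A100', 2, 24000), ('B200', 1, 4500)]
conn.close()
```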

The third form of data in healthcare consists of blobs of free text, typically generated to document an encounter or procedure. Although this text is stored electronically, very little analysis is done on it today, because SQL cannot effectively query or process these large strings. Natural language processing has been around since the 1950s, but progress in the field has been much slower than initially expected. The accuracy and reliability of the results produced by this technology do not yet meet the requirements of most clinical analytic use cases. There is much opportunity for progress in this area, particularly for clinical research.
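To illustrate why simple string matching falls short on clinical text, here is a minimal Python sketch using the standard-library `sqlite3` module. The `notes` table and its three sentences are made up for illustration. A substring search with `LIKE` returns every note that mentions pneumonia, including a negated finding and a family history entry, because SQL matches characters rather than meaning.

```python
import sqlite3

# Hypothetical table of free-text notes (made-up sample sentences).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT)")
conn.executemany(
    "INSERT INTO notes (body) VALUES (?)",
    [
        ("Chest X-ray consistent with pneumonia.",),
        ("No evidence of pneumonia on imaging.",),
        ("Family history: mother treated for pneumonia.",),
    ],
)

# Plain substring search; it has no notion of negation or who had the condition.
rows = conn.execute(
    "SELECT id, body FROM notes WHERE body LIKE ? ORDER BY id", ("%pneumonia%",)
).fetchall()

for note_id, body in rows:
    print(note_id, body)
conn.close()
```

All three notes match, but only the first describes the patient's own diagnosis. Telling the others apart requires handling negation and context, which is the kind of problem natural language processing tries to solve.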

Healthcare Analytics and Deeper Insight

Data analytics, wisely used, can create business value and competitive advantage. Compared with many other industries, healthcare has been a late adopter of analytics. Most health systems have lots of opportunities to improve clinical quality and financial performance, and analytics are required to identify and take advantage of those opportunities.

It’s a long journey for most organizations to develop a culture of continuous, data-driven improvement. Can big data help your healthcare organization along this journey? Hopefully you now have a framework to help guide your thinking.

What do you see when you consider big data for healthcare? What does the future hold?

About the Author: Jared Crapo joined Health Catalyst in February 2013 as a Vice President. Prior to coming to Catalyst, he worked for Medicity as the Chief of Staff to the CEO. During his tenure at Medicity, he was also the Director of Product Management and the Director of Product Strategy. Jared co-founded Allviant, a spin-out of Medicity, that created consumer health management tools. In his early career, he developed physician accounting systems and health claims payment systems.

This article was originally published on Health Catalyst and is republished here with permission. An enlarged image can be seen in the original article.