By Ashish Jha

Twitter: @ashishkjha

Now we’re giving star ratings to hospitals? Does anyone think this is a good idea? Actually, I do. Hospital rating schemes have cropped up all over the place, and sorting out what’s important and what isn’t is difficult and time-consuming. The Centers for Medicare & Medicaid Services (CMS) runs the best-known and most comprehensive hospital rating website, Hospital Compare. But, unlike most “rating” systems, Hospital Compare simply reports data on a large number of performance measures, from processes of care (did the patient get the antibiotics in time?) to outcomes (did the patient die?) to patient experience (was the patient treated with dignity and respect?). The measures it focuses on are important, generally valid, and usually endorsed by the National Quality Forum. The one big problem with Hospital Compare? It isn’t particularly consumer-friendly. With so many data points, it might take consumers hours to sort through all the information and figure out which hospitals do well, and which do not, on which set of measures.

To address this problem, CMS just released a new star rating system, initially focusing on patient experience measures. It takes a hospital’s scores on a series of validated patient experience measures and converts them into a single star rating (rating each hospital 1 star to 5 stars). I like it. Yes, it’s simplistic – but it is far more useful than the large number of individual measures that are hard to follow. There was no evidence that patients and consumers were using any of the data that were out there. I’m not sure that they will start using this one – but at least there’s a chance. And, with excellent coverage of this rating system from journalists like Jordan Rau of Kaiser Health News, the word is getting out to consumers.

Our analysis

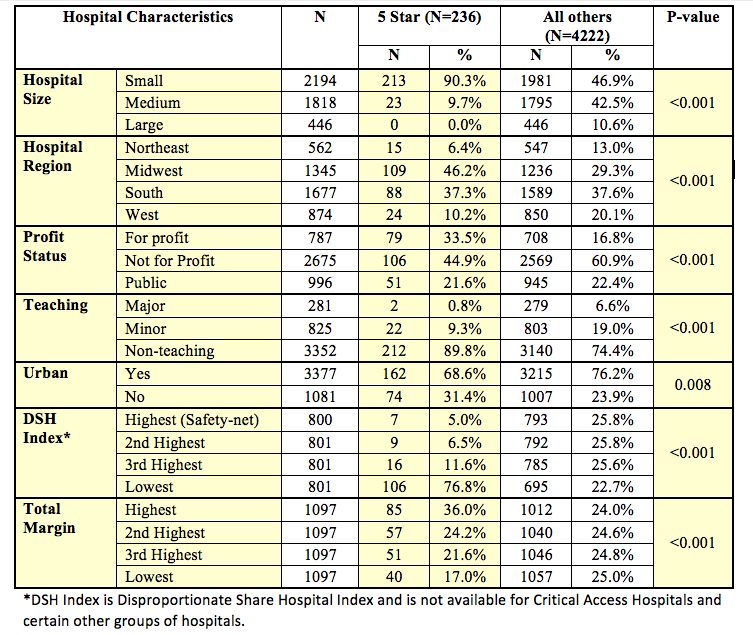

To understand the rating system a little better, I asked our team’s chief analyst, Jie Zheng, to help us see who did well and who did badly on the star ratings. We linked the hospital rating data to the American Hospital Association annual survey, which has data on structural characteristics of hospitals. She then ran both bivariate and multivariable analyses of whether a set of hospital characteristics predicts receiving 5 stars. Because the bivariate analyses are the most straightforward and useful for patients, we present only those data here.

Our results

What did we find? We found that large, non-profit, teaching, safety-net hospitals located in the northeastern or western parts of the country were far less likely to be rated highly (i.e., to receive 5 stars) than small, for-profit, non-teaching, non-safety-net hospitals located in the South or Midwest. The differences were big. There were 213 small hospitals (those with fewer than 100 beds) that received a 5-star rating. Number of large hospitals with a 5-star rating? Zero. Similarly, there were 212 non-teaching hospitals that received a 5-star rating. The number of major teaching hospitals (those that are a part of the Council of Teaching Hospitals)? Just two: the branches of the Mayo Clinic located in Jacksonville and Phoenix. And safety-net hospitals? Only 7 of the 800 hospitals (less than 1%) with the highest proportion of poor patients received a 5-star rating, while 106 of the 800 hospitals with the fewest poor patients did. That’s a 15-fold difference. Finally, another important predictor? Hospital margin: high-margin hospitals were about 50% more likely to receive a 5-star rating than hospitals with the lowest financial margins.
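For readers who want to check the safety-net arithmetic, here is a minimal sketch in Python. It uses only the counts quoted above (7 of 800 and 106 of 800); the variable names are mine, and this is not the analysis code our team ran.

```python
# Counts quoted in the text above; variable names are illustrative only.
GROUP_SIZE = 800             # hospitals in each comparison group
five_star_most_poor = 7      # 5-star hospitals among those with the most poor patients
five_star_fewest_poor = 106  # 5-star hospitals among those with the fewest poor patients

# Share of each group that earned 5 stars.
rate_most_poor = five_star_most_poor / GROUP_SIZE
rate_fewest_poor = five_star_fewest_poor / GROUP_SIZE

# How many times more likely the low-poverty group was to earn 5 stars.
ratio = rate_fewest_poor / rate_most_poor

print(f"Most poor patients:   {rate_most_poor:.1%} received 5 stars")
print(f"Fewest poor patients: {rate_fewest_poor:.1%} received 5 stars")
print(f"Ratio: about {ratio:.0f}-fold")
```

Because both groups have 800 hospitals, the ratio of rates is simply 106 divided by 7, which is where the roughly 15-fold figure comes from.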

Here are the data:

Interpretation

There are two important points worth considering in interpreting the results. First, these differences are sizeable. Huge, actually. In most studies, we are delighted to see 10% or 20% differences in structural characteristics between high- and low-performing hospitals. Because of the approach of the star ratings, especially the use of cut-points, we are seeing differences as great as 15-fold, or roughly 1,500% (on safety-net status, for instance).

The second point is that this is only a problem if you think it’s a problem. The patient surveys, known as HCAHPS, are validated, useful measures of patient experience and important outcomes unto themselves. I like them. They also tend to correlate well with other measures of quality, such as process measures and patient outcomes. The star ratings nicely encapsulate which types of hospitals do well on patient experience, and which ones do less well. One could criticize the methodology for the cut-points that CMS used to determine how many stars to award for which scores. I don’t think this is a big issue. Any time you use cut-points, there will be organizations right on the bubble, and surely a hospital that just missed 5 stars is similar to one that just made it. But that’s the nature of cut-points, and it’s a small price to pay to make data more accessible to patients.
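To make the cut-point issue concrete, here is a small illustrative sketch in Python. The thresholds and scores below are entirely hypothetical (they are not the values CMS uses, and the `stars` helper is my own invention); the point is only to show how two nearly identical scores can land on opposite sides of a cut-point.

```python
from bisect import bisect_right

# HYPOTHETICAL cut-points on a 0-100 summary score. These are NOT the
# thresholds CMS uses; they exist only to illustrate the idea.
CUT_POINTS = [60.0, 70.0, 80.0, 90.0]


def stars(score: float) -> int:
    """Map a summary score to 1-5 stars using the hypothetical cut-points."""
    # bisect_right counts how many cut-points the score meets or exceeds.
    return bisect_right(CUT_POINTS, score) + 1


just_missed = stars(89.9)  # a hair below the top cut-point
just_made = stars(90.0)    # exactly at the top cut-point

print(f"Score 89.9 -> {just_missed} stars")
print(f"Score 90.0 -> {just_made} stars")
```

Under these made-up thresholds, a gap of a tenth of a point separates a 4-star hospital from a 5-star one, which is the "on the bubble" problem described above.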

Making sense of this and moving forward

CMS has signaled that it will be doing similar star ratings for other aspects of quality, such as hospital performance on patient safety. The validity of those ratings will be directly proportional to the validity of the underlying measures used. For patient experience, CMS is using the gold standard. And the goals of the star rating are simple: motivate hospitals to get better and steer patients towards 5-star hospitals. After all, if you are sick, you want to go to a 5-star hospital. Some people will be disturbed by the fact that small, for-profit hospitals with high margins are getting the bulk of the 5-star ratings while large, major teaching hospitals with a lot of poor patients get almost none. It feels like a disconnect between what we think are good institutions and what the star ratings seem to be telling us. When I am sick, or when my family members need hospital care, I usually choose these large, non-profit academic medical centers. So the results will feel troubling to many. But this is not really a methodology problem. It may be that sicker, poor patients are less likely to rate their care highly. Or it may be that the hospitals that care for these patients are generally not as focused on patient-centered care. We don’t know. But what we do know is that if patients start really paying attention to the star ratings, they are likely to end up at small, for-profit, non-teaching hospitals. Whether that is a problem or not depends wholly on how you define a high-quality hospital.

About the Author: Dr. Ashish K. Jha is a practicing internist and a health policy researcher at the Harvard School of Public Health. This article was originally published on his blog, An Ounce of Evidence.